New AI-powered creative tools coming to Facebook and Instagram

- Facebook and Instagram are introducing new AI-powered creative tools that allow users to edit their photos and create high-quality videos using text descriptions.

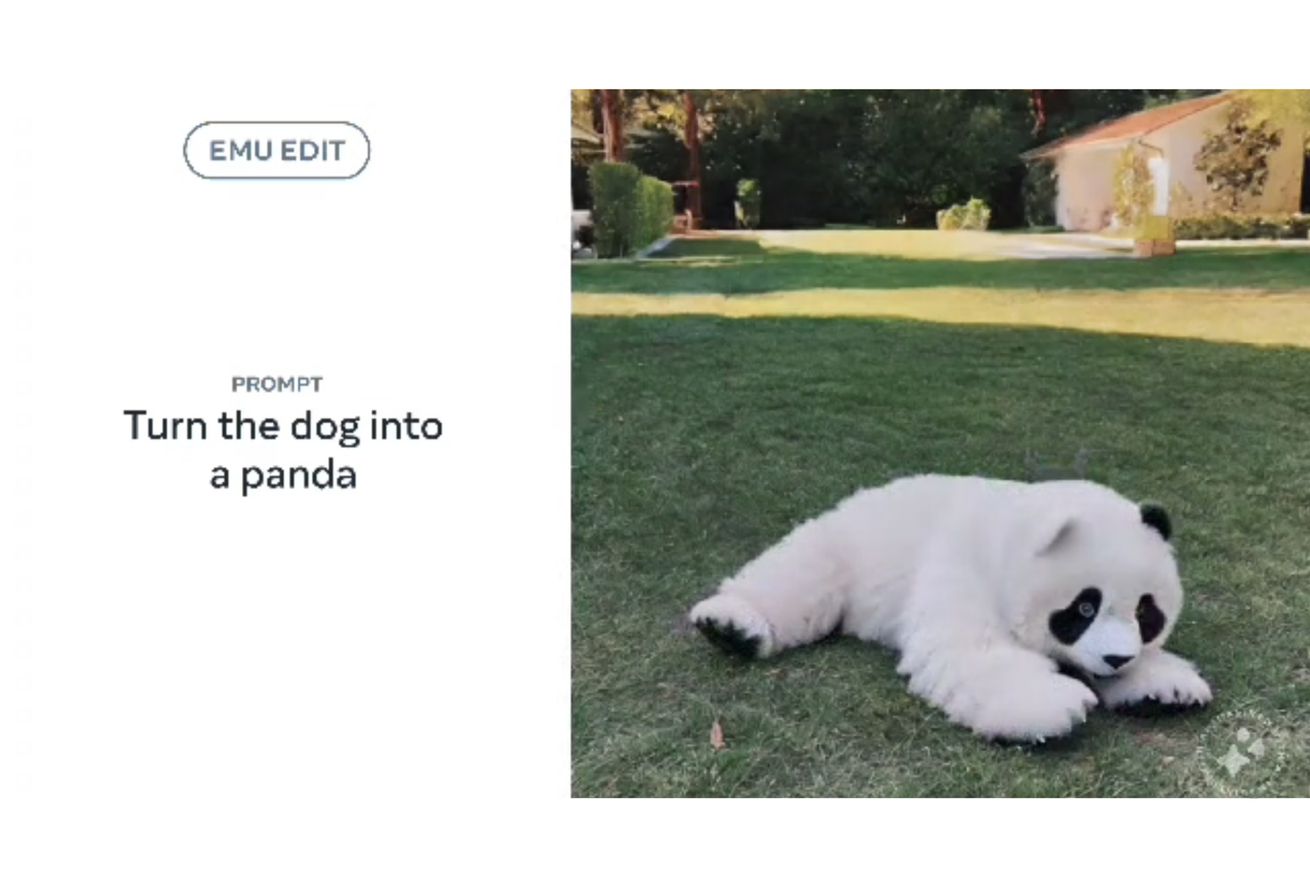

- The first tool, called “Emu Edit,” allows users to make precise alterations to images based on text inputs. It offers capabilities similar to existing tools from Adobe, Google, and Canva, letting users remove objects and people from photographs or replace them.

- Emu Edit is built on Emu, Meta’s foundation model for image generation.

- The second tool, called “Text-to-Video,” allows users to generate animated videos from text descriptions alone, without supplying any footage or other visual assets.

- Both tools are part of Meta’s broader efforts to enhance the creative capabilities of its platforms and empower users to express themselves in new ways.

Emu Edit allows precise image alterations based on text inputs

Facebook and Instagram are introducing a new AI-powered creative tool called “Emu Edit.” This tool allows users to precisely alter images based on text inputs. It is built on Emu, Meta’s foundation model for image generation.

Emu Edit offers capabilities similar to existing tools from Adobe, Google, and Canva. Users can remove objects and people from photographs or replace them without any professional image-editing experience: the tool interprets the text instruction and applies the corresponding change to the image.

The article does not explain how Emu Edit works. Presumably, the tool combines natural language processing, to interpret the user’s instruction, with computer vision, to locate and modify the relevant parts of the image.

Text-to-Video generates animated videos using text descriptions

Facebook and Instagram are also introducing “Text-to-Video,” an AI-powered tool that generates animated videos from text descriptions alone, with no need for additional visual assets.

Text-to-Video is aimed at users who want to create videos but lack footage or other visual resources. By writing a text description, they can generate an animated video that conveys their idea or story.

The article does not describe the technology behind Text-to-Video. Presumably, the tool uses natural language processing to interpret the description and a generative model to produce animated visuals that match it.

Meta’s focus on enhancing creative capabilities and self-expression

The introduction of these new AI-powered tools by Facebook and Instagram is part of Meta’s broader efforts to enhance the creative capabilities of its platforms. By leveraging AI technology, Meta aims to empower users to express themselves in new and innovative ways, even if they do not have professional editing or video production skills.

Emu Edit and Text-to-Video give users accessible options for editing images and creating videos. With Emu Edit, users can alter their photos through text instructions, removing or replacing objects and people without complex editing software.

Text-to-Video goes a step further by generating animated videos from text descriptions alone, so users can convey ideas and stories visually without producing any footage.

Overall, these tools reflect Meta’s focus on creativity and self-expression: giving users, including those without specialized skills, the means to create and share their own content.